Hash tables! They're everywhere. They're also pretty boring, but I've had GLib issue #1198 sitting around for a while, and the GNOME move to GitLab resulted in a helpful reminder (or two) being sent out that convinced me to look into it again with an eye towards improving GHashTable and maybe answering some domain-typical questions, like "You're using approach X, but I've heard approach Y is better. Why don't you use that instead?" and "This other hash table is 10% faster in my extremely specific test. Why is your hash table so bad?".

And unfairly paraphrased as those questions may be, I have to admit I'm curious too. Which means benchmarks. But first, what exactly makes a hash table good? Well, it depends on what you're looking for. I made a list.

A miserable pile of tradeoffs

AKA hard choices. You have to make them.

Simple keys vs. complex keys: The former may be stored directly in the hash table and are quick to hash (e.g. integers), while the latter may be stored as pointers to external allocations requiring a more elaborate hash function and additional memory accesses (e.g. strings). If you have complex keys, you'll suffer a penalty on lookups and when the hash table is resized, but this can be avoided by storing the generated hash for each key. However, for simple keys this is likely a waste of memory.
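Here's a rough sketch of what storing the generated hash buys you with string keys. It is not GHashTable's actual code: the fixed capacity, the linear probing, the djb2-style `str_hash()` and every name in it are assumptions made for illustration. The point is the cheap integer compare that filters out most candidates before `strcmp()` has to touch the external key allocation.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define CAPACITY 64u  /* hypothetical fixed size, must be a power of two */

typedef struct {
    const char *key;   /* NULL marks an empty slot in this sketch */
    uint32_t    hash;  /* hash of key, computed once at insertion */
    int         value;
} Entry;

typedef struct {
    Entry slots[CAPACITY];
} Table;

/* djb2-style string hash; any string hash would do here. */
static uint32_t str_hash(const char *s)
{
    uint32_t h = 5381;
    for (; *s; s++)
        h = h * 33 + (unsigned char)*s;
    return h;
}

/* Returns the entry for key, the empty slot where it would go,
 * or NULL if the table is full and the key is absent. */
static Entry *table_find(Table *t, const char *key, uint32_t hash)
{
    uint32_t i = hash & (CAPACITY - 1);
    for (unsigned n = 0; n < CAPACITY; n++, i = (i + 1) & (CAPACITY - 1)) {
        Entry *e = &t->slots[i];
        if (e->key == NULL)
            return e;          /* end of the probe chain */
        if (e->hash != hash)
            continue;          /* cheap reject: no pointer chase, no strcmp */
        if (strcmp(e->key, key) == 0)
            return e;
    }
    return NULL;
}

static void table_insert(Table *t, const char *key, int value)
{
    uint32_t h = str_hash(key);
    Entry *e = table_find(t, key, h);
    assert(e != NULL);         /* this sketch never resizes */
    e->key = key;
    e->hash = h;
    e->value = value;
}

static int table_lookup(Table *t, const char *key, int *value_out)
{
    Entry *e = table_find(t, key, str_hash(key));
    if (e == NULL || e->key == NULL)
        return 0;
    *value_out = e->value;
    return 1;
}
```

A resize would reuse the same field: each entry's new slot can be computed from `hash` alone, without reading the key again. For integer keys the extra four bytes per entry buy very little, which is the memory waste mentioned above.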

Size of the data set: Small data sets may fit entirely in cache memory. For those, a low instruction count can be beneficial, but as the table grows, memory latency starts to dominate, and you'll get away with more complex code. The extra allowance can be used to improve cache efficiency, perhaps with bucketization or some other fancy scheme. If you know the exact size of the data set in advance, or if there's an upper bound and memory is not an issue, you can avoid costly resizes.

Speed vs. memory generally: There are ways to trade off memory against speed directly. Google Sparsehash is an interesting example of this; it has the ability to allocate the table in small increments using many dynamically growing arrays of tightly packed entries. The arrays have a maximum size that can be set at compile time to yield a specific tradeoff between access times and per-entry memory overhead.
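To give a feel for the "tightly packed entries" idea, here's a minimal sketch of a single sparse group. It is written from the description above rather than from Sparsehash's source, so the group size of 48, the bitmap layout and all names are assumptions. A bitmap records which logical slots are occupied, and the packed array only holds the occupied ones, growing by one element at a time.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define GROUP_SIZE 48u  /* assumed maximum number of logical slots per group */

typedef struct {
    uint64_t bitmap;  /* bit i set means logical slot i is occupied */
    int64_t *items;   /* occupied slots only, in slot order */
    uint32_t count;   /* number of elements in items */
} SparseGroup;

static uint32_t popcount64(uint64_t x)
{
    uint32_t n = 0;
    while (x) {
        x &= x - 1;   /* clear the lowest set bit */
        n++;
    }
    return n;
}

/* Position in the packed array = number of occupied slots below this one. */
static uint32_t packed_index(const SparseGroup *g, uint32_t slot)
{
    return popcount64(g->bitmap & ((UINT64_C(1) << slot) - 1));
}

static int group_get(const SparseGroup *g, uint32_t slot, int64_t *out)
{
    assert(slot < GROUP_SIZE);
    if (((g->bitmap >> slot) & 1) == 0)
        return 0;
    *out = g->items[packed_index(g, slot)];
    return 1;
}

static int group_set(SparseGroup *g, uint32_t slot, int64_t item)
{
    assert(slot < GROUP_SIZE);
    uint32_t pos = packed_index(g, slot);
    if (((g->bitmap >> slot) & 1) == 0) {
        /* Grow by exactly one element and open a gap at pos. */
        int64_t *grown = realloc(g->items, (g->count + 1) * sizeof *grown);
        if (grown == NULL)
            return 0;
        memmove(grown + pos + 1, grown + pos, (g->count - pos) * sizeof *grown);
        g->items = grown;
        g->count++;
        g->bitmap |= UINT64_C(1) << slot;
    }
    g->items[pos] = item;
    return 1;
}
```

Larger groups amortize the bitmap and pointer over more entries but make each insertion shift more data, which is the access time versus per-entry overhead tradeoff described above.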

Distribution robustness: A good hash distribution is critical to preventing performance oopsies and, if you're storing untrusted input, thwarting deliberate attacks. This can be costly in the average case, though, so there's a gradient of approaches with power-of-two-sized tables and bitmask moduli at the low end, more elaborate hash functions and prime moduli somewhere in the middle, and cryptographically strong, salted hashes at the very high end. Chaining tables have an additional advantage here, since they don't have to deal with primary clustering.

Mode of operation: Some tables can do fast lookups, but are slower when inserting or deleting entries. For instance, a table implementing the Robin Hood probing scheme with a high load factor may require a large number of entries to be shifted down to make room for an insertion, while lookups remain fast due to this algorithm's inherent key ordering. An algorithm that replaces deleted entries with tombstones may need to periodically re-hash the entire table to get rid of those. Failed lookups can be much slower than successful ones. Sometimes it makes sense to target specific workloads, like the one where you're filtering redundant entries from a dataset and using a hash table to keep track of what you've already seen. This is pretty common and requires insertion and lookup to be fast, but not deletion. Finally, there are more complex operations with specific behavior for key replacement, or iterating over part of the dataset while permuting it somehow.

Flexibility and convenience: Some implementations reserve part of the keyspace for internal use. For instance, Google Sparsehash reserves two values, one for unused entries and one for tombstones. These may be -1 and -2, or perhaps "u" and "t" if your keys are strings. In contrast, GHashTable lets you use the entire keyspace, including storing a value for the NULL key, at the cost of some extra bookkeeping memory (basically special cases of the stored hash field — see "complex keys" above). Preconditions are also nice to have when you slip up; the fastest implementations may resort to undefined behavior instead.
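A minimal sketch of how the stored hash field can carry the "unused" and "tombstone" states so that no key value has to be reserved. The specific values 0 and 1, the fixed size, the identity hash and the linear probing are all assumptions for illustration, not a copy of GHashTable.

```c
#include <assert.h>
#include <stdint.h>

#define SLOTS 16u            /* hypothetical fixed size, power of two */
#define HASH_UNUSED    0u    /* slot never held an entry */
#define HASH_TOMBSTONE 1u    /* slot held an entry that was removed */

typedef struct {
    uint32_t hashes[SLOTS];  /* 0 = unused, 1 = tombstone, >= 2 = live */
    int64_t  keys[SLOTS];
    int      values[SLOTS];
} Table;

/* Identity hash, nudged away from the two reserved values. */
static uint32_t real_hash(int64_t key)
{
    uint32_t h = (uint32_t)key;
    return h < 2 ? 2 : h;
}

/* Returns the slot holding key, or -1. Tombstones do not end the probe. */
static int find_slot(const Table *t, int64_t key)
{
    uint32_t h = real_hash(key);
    uint32_t i = h & (SLOTS - 1);
    for (uint32_t n = 0; n < SLOTS; n++, i = (i + 1) & (SLOTS - 1)) {
        if (t->hashes[i] == HASH_UNUSED)
            return -1;
        if (t->hashes[i] == h && t->keys[i] == key)
            return (int)i;
    }
    return -1;
}

static void insert(Table *t, int64_t key, int value)
{
    int s = find_slot(t, key);
    if (s < 0) {
        uint32_t i = real_hash(key) & (SLOTS - 1);
        uint32_t n = 0;
        while (t->hashes[i] >= 2) {  /* skip live entries, reuse tombstones */
            i = (i + 1) & (SLOTS - 1);
            n++;
            assert(n < SLOTS);       /* this sketch never resizes */
        }
        s = (int)i;
        t->hashes[s] = real_hash(key);
        t->keys[s] = key;
    }
    t->values[s] = value;
}

static int remove_key(Table *t, int64_t key)
{
    int s = find_slot(t, key);
    if (s < 0)
        return 0;
    t->hashes[s] = HASH_TOMBSTONE;   /* keeps later probe chains intact */
    t->keys[s] = 0;                  /* clear stale data */
    t->values[s] = 0;
    return 1;
}
```

Keys such as -1, 0 and 1 are all usable here because emptiness is encoded in `hashes[]`, not in `keys[]`. The cost is the extra array, plus the occasional collision between keys whose hashes were nudged to the same value.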

GC and debugger friendliness: If there's a chance that your hash table will coexist with a garbage collector, it's a good idea to play nice and clear keys and values when they're removed from the table. This ensures you're not tying up outside memory by keeping useless pointers to it. It also lets memory debuggers like Valgrind detect more leaks and whatnot. There is a slight speed penalty for doing this.

Other externalities: Memory fragmentation, peak memory use, etc. go here. Open addressing helps with the former, since the hash table then only makes a few big allocations which the allocator can satisfy with mmap() and simply munmap() when they're not needed anymore. Chaining hash tables make lots of heap allocations, which can get interspersed with other allocations, leaving tiny holes all over when freed. Bad!

A note on benchmarks

This isn't the first time, or the second time, or likely even the 100th time someone posts a bunch of hash table benchmarks on the Internet, but it may be the second or third time or so that GHashTable makes an appearance. Strange maybe, given its install base must number in the millions — but on the other hand, it's quite tiny (and boring). The venerable hash table benchmarks by Nick Welch still rule the DuckDuckGo ranking, and they're useful and nice despite a few significant flaws: Notably, there's a bug that causes the C++ implementations to hash pointers to strings instead of the strings themselves, they use virtual size as a measure of memory consumption, and they only sample a single data point at the end of each test run, so there's no intra-run data.

He also classifies GHashTable as a "Joe Sixpack" of hash tables, which is uh… pretty much what we're going for! See also: The Everyman's Hash Table (with apologies to Philip).

Anyway. The first issue is easily remedied by using std::string in the C++ string tests. Unfortunately, it comes with extra overhead, but I think that's fair when looking at out-of-the-box performance.

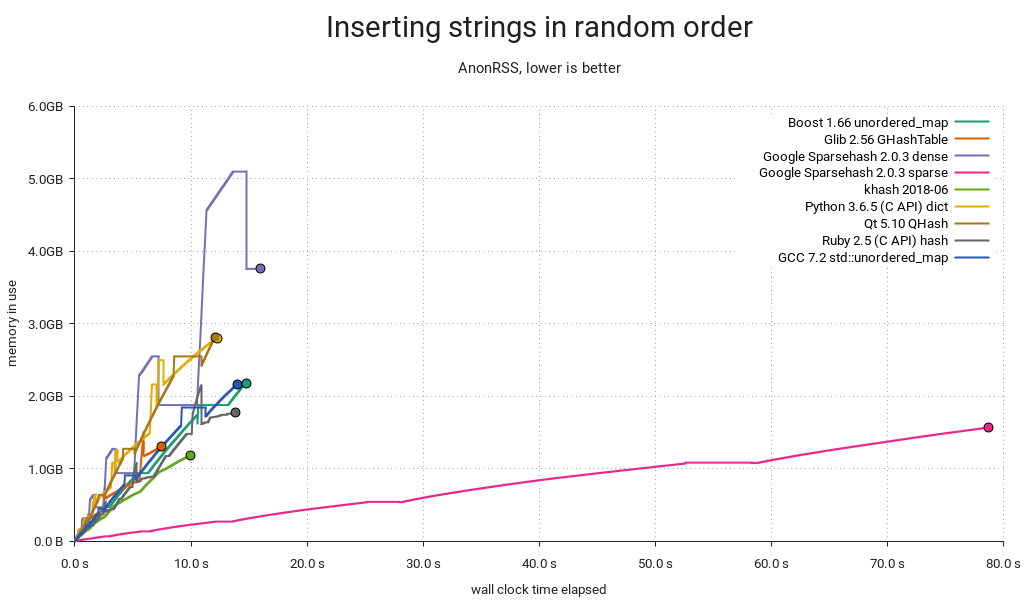

The second issue is a bit murkier, but it makes sense to measure the amount of memory that the kernel has to provide additional physical backing for at runtime, i.e. memory pages dirtied by the process. This excludes untouched virtual memory, read-only mappings from shared objects, etc. while still capturing the effects of allocator overhead and heap fragmentation. On Linux, it can be read from /proc/<pid>/smaps as the sum of all Private_Dirty and Swap fields. However, polling this file has a big impact on performance and unfairly penalizes processes with more mappings, so in the end I settled on the anonymous RSS figure from /proc/<pid>/status (the RssAnon field), which works out to almost the same thing in practice, but with a lower and much fairer penalty.

In order to provide a continuous stream of samples, I wrote a small piece of C code that runs each test in a child process while monitoring its output and sampling RssAnon a hundred times or so per second. Overkill, yes, but it's useful for capturing those brief memory peaks. The child process writes a byte to stdout per 1000 operations performed so we can measure progress. The timestamps are all in wall-clock time.
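For reference, a minimal sketch of how one such sample could be taken. This is not the sampler from the benchmark; the function names are made up, and it assumes the anonymous RSS shows up as a line starting with `RssAnon:` in /proc/<pid>/status, which is the spelling used by the Linux kernels I'm aware of (4.5 and later).

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Find a "Field:   <n> kB" line in a /proc/<pid>/status-style buffer.
 * Returns the number, or -1 if the field is absent. */
static long status_field_kb(const char *text, const char *field)
{
    size_t len = strlen(field);
    const char *line = text;

    while (line != NULL && *line != '\0') {
        if (strncmp(line, field, len) == 0 && line[len] == ':')
            return strtol(line + len + 1, NULL, 10);  /* skips the padding */
        line = strchr(line, '\n');
        if (line != NULL)
            line++;
    }
    return -1;
}

/* Read the anonymous RSS of a process in kB, or -1 on failure. */
static long read_rss_anon_kb(int pid)
{
    char path[64];
    char buf[8192];

    snprintf(path, sizeof path, "/proc/%d/status", pid);
    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    size_t n = fread(buf, 1, sizeof buf - 1, f);
    fclose(f);
    buf[n] = '\0';
    return status_field_kb(buf, "RssAnon");
}
```

A sampler would call `read_rss_anon_kb()` on the child's pid in a timed loop and record each result next to a wall-clock timestamp and the progress byte count.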

The tests themselves haven't changed that much relative to Welch's, but I added some to look for worst-case behavior (spaced integers, aging) and threw in khash, a potentially very fast C hash table. I ran the below benchmarks on an Intel i7-4770 Haswell workstation. They should be fairly representative of modern 64-bit architectures.

Some results

As you may have gathered from the above, it's almost impossible to make an apples-to-apples comparison. Benchmarks don't capture every aspect of performance, and I'm not out to pick on any specific implementation, YMMV, etc. With that in mind, we can still learn quite a bit.

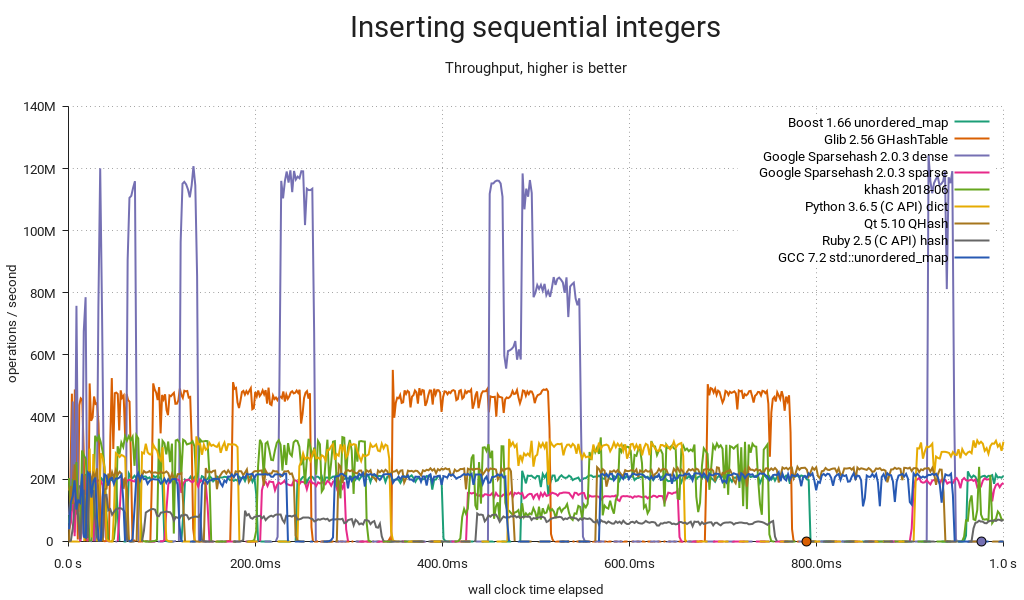

"Sequential" here means {1, 2, …}. The integer tests all map 64-bit integers to void *, except for the Python one, which maps PyLong_FromLong() PyObjects to a single shared PyObject value created in the same manner at the start of the test. This results in an extra performance hit from the allocator and type system, but it's a surprisingly good showing regardless. Ruby uses INT2FIX() for both, which avoids extra allocations and makes for a correspondingly low memory overhead. Still, it's slow in this use case. GHashTable embeds integers in pointers using GINT_TO_POINTER(), but this is only portable for 32-bit integers.

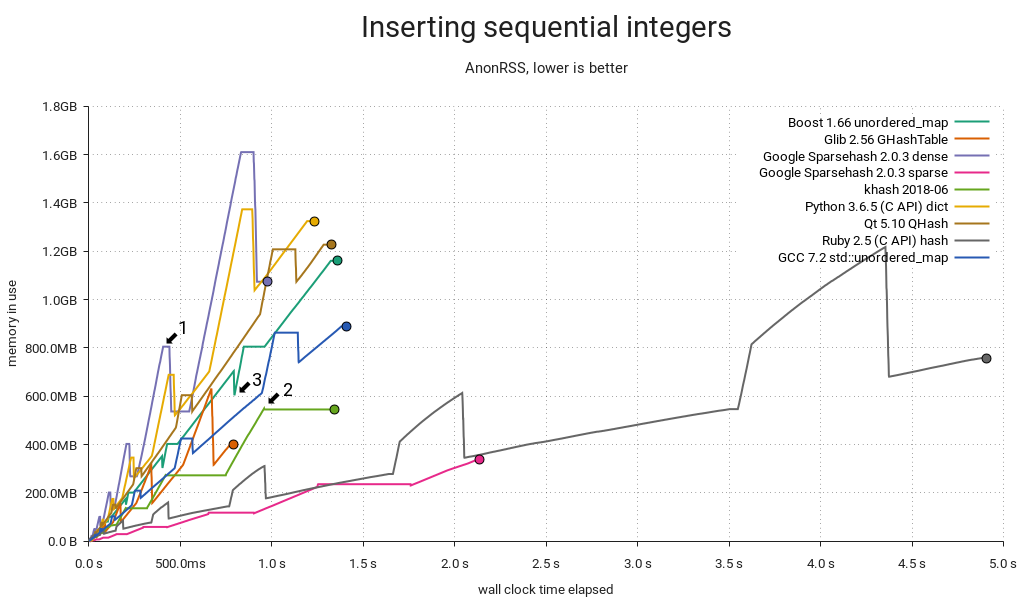

When a hash table starts to fill up, it must be resized. The most common way to do this is to allocate a new map, re-insert the elements from the old map into it, and then free the old map. Memory use peaks when this happens (1) because you're briefly keeping two maps around. I already knew that Google Sparsehash's sparse mode allows it to allocate and free buckets in small increments, but khash's behavior (2) surprised me: it realloc()s and rearranges the key and value arrays in-place using an eviction scheme. It's a neat trick that's probably readily applicable in GHashTable too.

Speaking of GHashTable, it does well. Suspiciously well, in fact, and the reason is g_direct_hash() mapping input keys {1, 2, …} to corresponding buckets {1, 2, …} hitting the same cache lines repeatedly. This may sound like a good thing, but it's actually quite bad, as we'll see later in this post. Normally I'd expect khash to be faster, but its hash function is not as cache-friendly:

#define kh_int64_hash_func(key) (khint32_t)((key)>>33^(key)^(key)<<11)
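To see why this is less cache-friendly for sequential keys, here's a small sketch that computes bucket indexes both ways. The helper names are made up, and the "direct" variant is just the identity mapping described above, not GLib code. The khash expression is the same as the macro, with explicit parentheses added.

```c
#include <stdint.h>

/* Same expression as kh_int64_hash_func, written as a function. */
static uint32_t kh_int64_hash(uint64_t key)
{
    return (uint32_t)((key >> 33) ^ key ^ (key << 11));
}

/* Bucket index in a table of n_buckets slots (a power of two). */
static uint32_t khash_bucket(uint64_t key, uint32_t n_buckets)
{
    return kh_int64_hash(key) & (n_buckets - 1);
}

/* Identity mapping: key k goes to bucket k while k fits in the table. */
static uint32_t direct_bucket(uint64_t key, uint32_t n_buckets)
{
    return (uint32_t)key & (n_buckets - 1);
}
```

In a table with 2^20 buckets, keys 1, 2 and 3 go to buckets 1, 2 and 3 with the identity mapping, but to 2049, 4098 and 6147 with the khash function, since `key ^ (key << 11)` equals `key * 2049` for small keys. Neighboring keys therefore end up thousands of slots apart instead of sharing a cache line.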

Finally, it's worth mentioning Boost's unique allocation pattern (3). I don't know what's going on there, but if I had to guess, I'd say it keeps supplemental map data in an array that can simply be freed just prior to each resize.

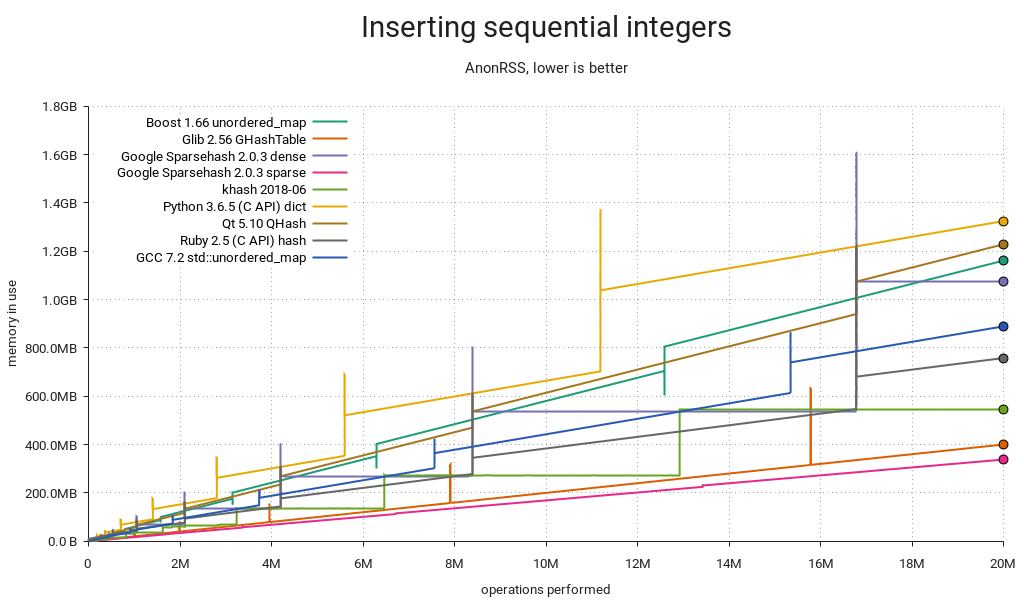

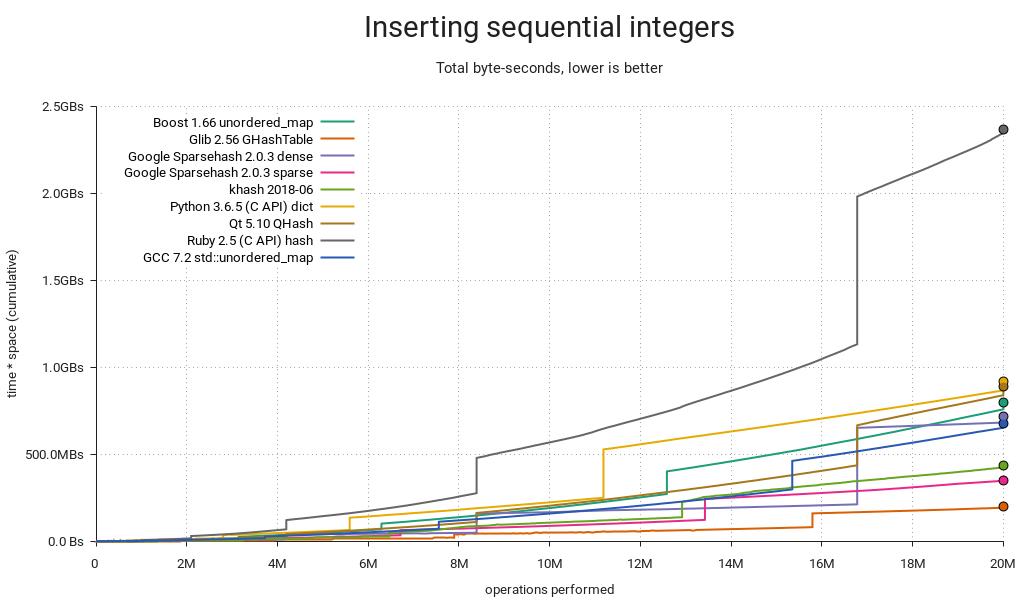

This is essentially the same plot, but with the time dimension replaced by insertion count. It's a good way to directly compare the tables' memory use over their life cycles. Again, GHashTable looks good due to its dense access pattern, with entries concentrated in a small number of memory pages.

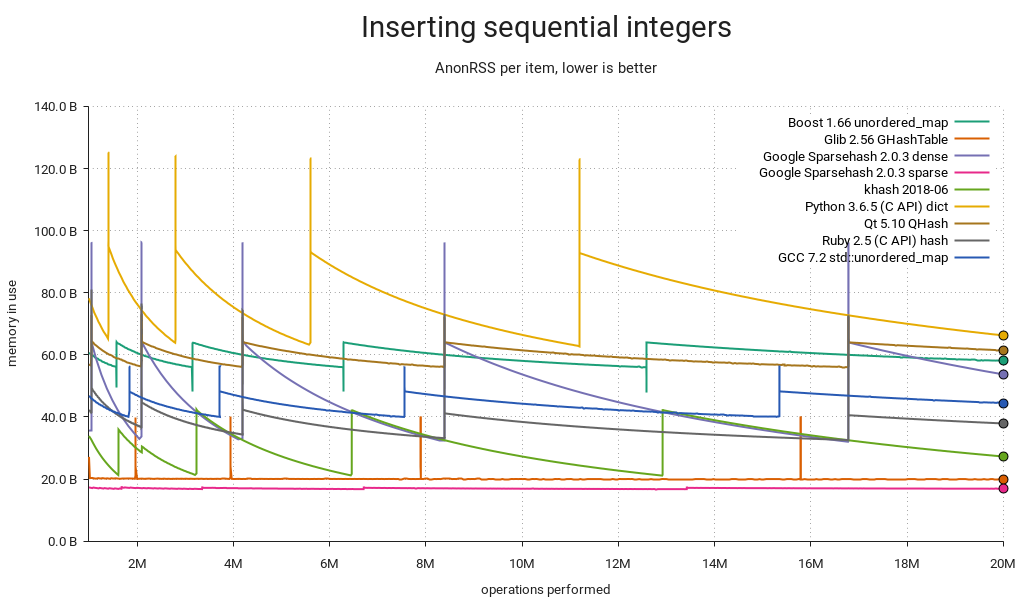

Yet another way of slicing it. This one's like the previous plot, but the memory use is per item held in the table. The fill-resize cycles are clearly visible. Sparsehash (sparse) is almost optimal at ~16 bytes per item, while GHashTable also stores its calculated hashes, for an additional 4 bytes.

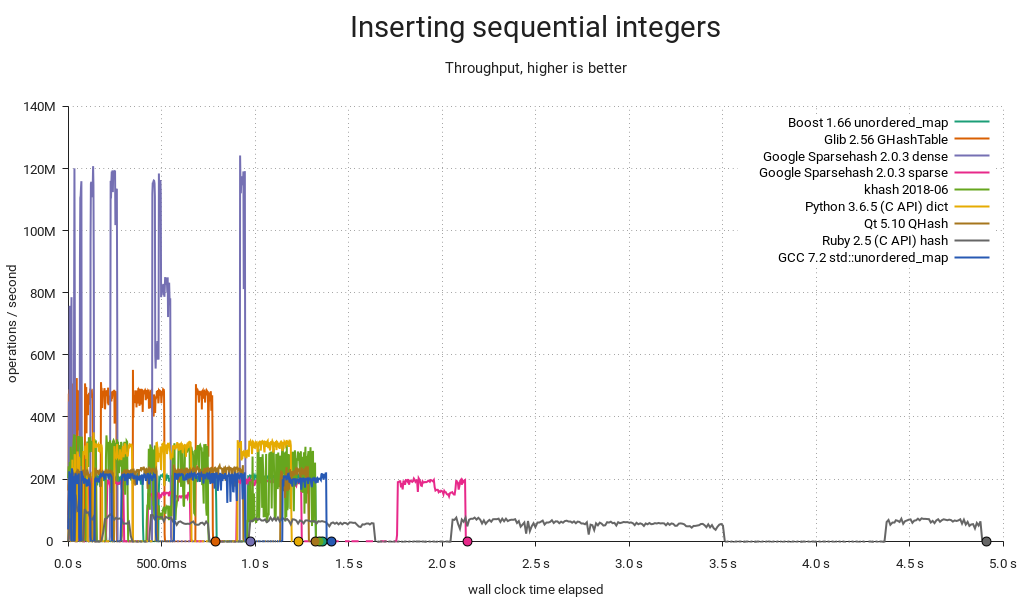

Still noisy even after gnuplot smoothing, this is an approximation of the various tables' throughput over time. The interesting bits are kind of squeezed in there at the start of the plot, so let's zoom in…

Hash table operations are constant time, right? Well — technically yes, but if you're unlucky and your insertion triggers a resize, you may end up waiting for almost as long as all the previous insertions put together. If you need predictable performance, you must either find a way to set the size in advance or use a slower-and-steadier data structure. Also noteworthy: Sparsehash (dense) is by far the fastest at peak performance, but it struggles a bit with resizes and comes in second.

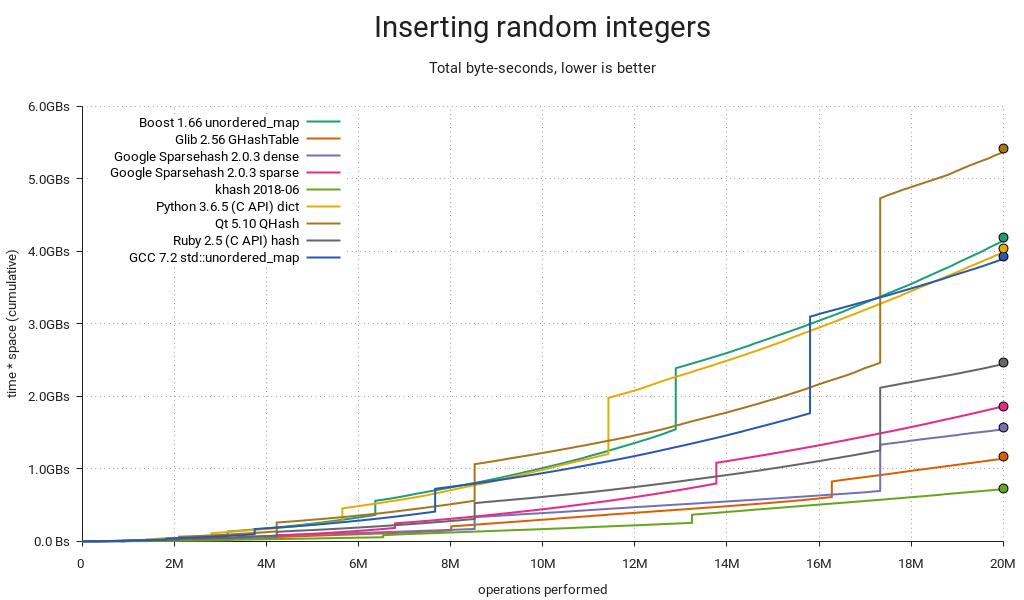

This one merits a little extra explanation: Some hash tables are fast and others are small, but how do you measure overall resource consumption? The answer is to multiply time by memory, which gets you a single dimension measured in byte-seconds. It's more abstract, and it's not immediately obvious if a particular Bs measurement is good or bad, but relatively it makes a lot of sense: If you use twice as much memory as your competitor, you can make up for it by being twice as fast. Since both resources are affected by diminishing returns, a good all-rounder like GHashTable should try to hit the sweet spot between the two.

Memory use varies over time, and consequently it's not enough to multiply the final memory consumption by total run time. What you really want is the area under the graph in the first plot (memory use over time), and you get there by summing up all the little byte-second slices covered by your measurements. You plot the running sum as you sweep from left to right, and the result will look like the above.
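The running sum can be sketched in a few lines. The `Sample` struct and function name are hypothetical, and the trapezoid rule used for each slice is an assumption; plain rectangles would work just as well at a hundred samples per second.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    double t;      /* wall-clock seconds since the start of the run */
    double bytes;  /* memory in use at time t */
} Sample;

/* Writes the cumulative byte-seconds up to each sample into out[]
 * and returns the total area under the memory-over-time curve. */
static double running_byte_seconds(const Sample *s, size_t n, double *out)
{
    double sum = 0.0;

    for (size_t i = 0; i < n; i++) {
        if (i > 0) {
            assert(s[i].t >= s[i - 1].t);  /* samples must be in time order */
            /* One slice: average height times width. */
            sum += 0.5 * (s[i].bytes + s[i - 1].bytes) * (s[i].t - s[i - 1].t);
        }
        out[i] = sum;
    }
    return sum;
}
```

For samples (0 s, 0 B), (1 s, 100 B), (2 s, 100 B), (3 s, 300 B), the slices are 50, 100 and 200 Bs, so the running sum is 0, 50, 150, 350. Plotting `out[]` against `t` gives a curve of the kind shown above.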

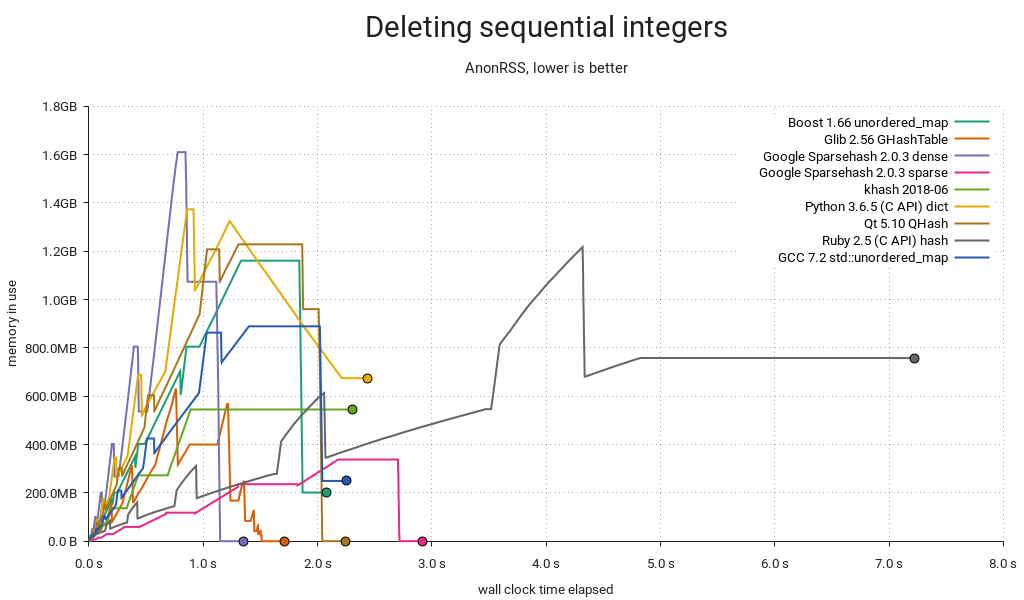

It's not enough to just insert items; sometimes you have to delete them too. In the above plot, I'm inserting 20M items as before, and then deleting them all in order. The hash table itself is not freed. As you can probably tell, there's a lot going on here!

First, this is unfair to Ruby, since I suspect it needs a GC pass and I couldn't find an easy way to do it from the C API. Sorry. Python's plot is weird (and kind of good?) — it seems like it's trimming memory in small increments. In GLib land, GHashTable dutifully shrinks as we go, and since it keeps everything in a handful of big allocations that can be kept in separate memory maps, there is minimal heap pollution and no special tricks needed to return memory to the OS. Sparsehash only shrinks on insert (this makes it faster), so I added a single insert after the deletions to help it along. I also put in a malloc_trim(0) without which the other C++ implementations would be left sitting on a heap full of garbage — but even with the trim in, Boost and std::unordered_map bottom out at ~200MB in use. khash is unable to shrink at all; there's a TODO in the code saying as much.

You may have noticed there are horizontal "tails" on this one — they're due to a short sleep at the end of each test to make sure we capture the final memory use.

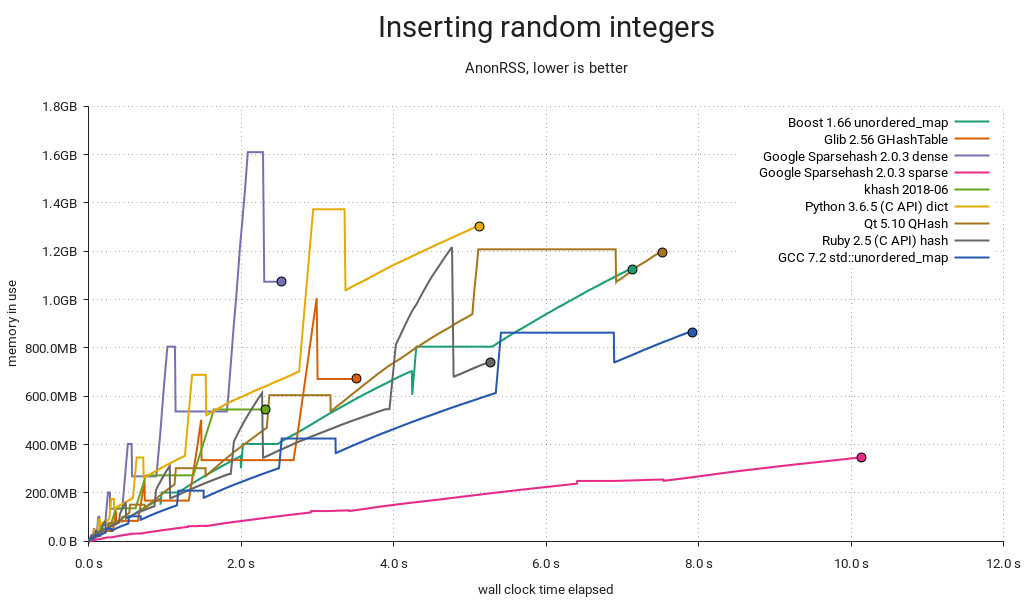

Another insertion test, but instead of a {1, 2, …} sequence it's now integers pseudorandomly chosen from the range [0 .. 2²⁸-1] with a fixed seed. Ruby performs about the same as before, but everyone else got slower, putting it in the middle of the pack. This is also a more realistic assessment of GHashTable. Note that we're making calls to random() here, which adds a constant overhead — not a big problem, since it should affect everyone equally and we're not that interested in absolute timings — but a better test might use a pregenerated input array.
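A pregenerated input array could look something like the sketch below. It swaps random() for a small xorshift generator so the block is self-contained; that substitution, the function names and the seed handling are all assumptions, not what the benchmark does.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* xorshift64: a stand-in for random() so the sketch has no dependencies. */
static uint64_t next_random(uint64_t *state)
{
    uint64_t x = *state;
    x ^= x << 13;
    x ^= x >> 7;
    x ^= x << 17;
    *state = x;
    return x;
}

/* Returns n keys in [0 .. 2^28-1], fully determined by the seed.
 * The caller frees the array. Returns NULL if allocation fails. */
static uint32_t *pregenerate_keys(size_t n, uint64_t seed)
{
    assert(seed != 0);  /* xorshift gets stuck at zero */
    uint32_t *keys = malloc(n * sizeof *keys);
    if (keys == NULL)
        return NULL;

    uint64_t state = seed;
    for (size_t i = 0; i < n; i++)
        keys[i] = (uint32_t)(next_random(&state) & ((1u << 28) - 1));
    return keys;
}
```

The timed loop would then only read `keys[i]`, so the generator's cost stays out of the measurement. The price is 4 bytes per key of extra memory, 80MB for 20M keys, which would have to be allocated where it doesn't distort the memory figures.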

GHashTable is neck-and-neck with Sparsehash (dense), but they're both outmatched by khash.

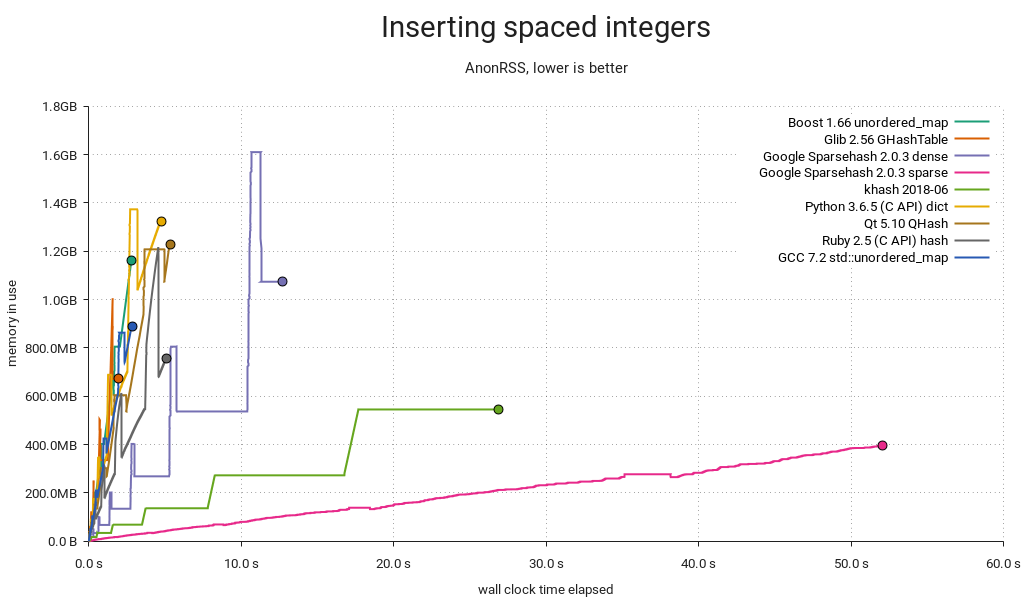

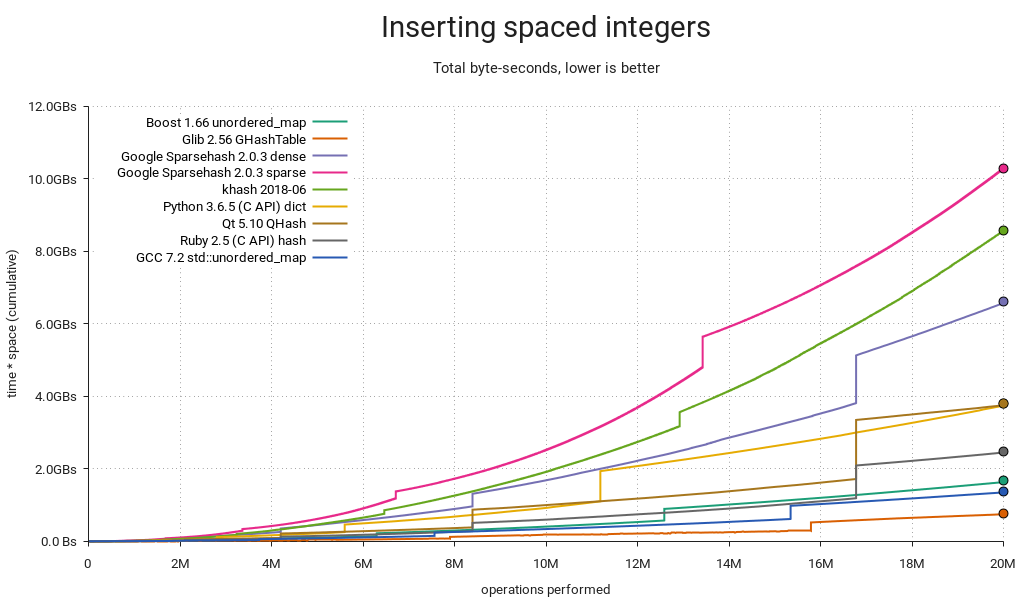

With spaced integers in the sequence {256, 512, 768, …}, the wheels come off Sparsehash and khash. Both implementations use table sizes that are powers of two, likely because it makes the modulo operation faster (it's just a bitmask) and because they use quadratic probing, which is only really efficient when the table size is a power of two. The downside is that integers with equal low-order bits will be assigned to a small number of common buckets, forcing them to probe a lot.
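The effect is easy to reproduce. This sketch counts how many distinct buckets a run of spaced keys can reach under a bitmask. It uses a plain identity hash for simplicity, which is an assumption; the function name is made up.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Number of distinct initial buckets hit by the keys
 * stride, 2*stride, ..., n_keys*stride in a power-of-two table. */
static uint32_t distinct_buckets_masked(uint32_t n_buckets, uint64_t stride,
                                        uint32_t n_keys)
{
    assert(n_buckets != 0 && (n_buckets & (n_buckets - 1)) == 0);

    unsigned char *seen = calloc(n_buckets, 1);
    assert(seen != NULL);

    uint32_t distinct = 0;
    for (uint64_t k = 1; k <= n_keys; k++) {
        uint32_t b = (uint32_t)((k * stride) & (n_buckets - 1));
        if (!seen[b]) {
            seen[b] = 1;
            distinct++;
        }
    }
    free(seen);
    return distinct;
}
```

With 4096 buckets and a stride of 256, a thousand keys share just 16 initial buckets, because the low 8 bits of every key are zero. With a stride of 1 the same thousand keys get a bucket each.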

GHashTable also uses quadratic probing in a power-of-two-sized table, but it's immune to this particular pattern due to a twist: For each lookup, the initial probe is made with a prime modulus, after which it switches to a bitmask. This means some buckets are unreachable to the initial probe, but their number is very small in practice. For the first few table sizes (8, 16, 32, 64, 128, 256), the number of unreachable buckets is 1, 3, 1, 3, 1, and 5. Subsequent probing will reach these.
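A sketch of that probing scheme, under two assumptions: the prime is the largest one below the table size (which reproduces the counts quoted above), and "quadratic" means steps of 1, 2, 3, … added under the mask. The function names are made up, and a real table would store the prime instead of recomputing it.

```c
#include <assert.h>
#include <stdint.h>

/* Largest prime below n, by trial division. Fine for a sketch. */
static uint32_t largest_prime_below(uint32_t n)
{
    assert(n >= 3);
    for (uint32_t p = n - 1; p >= 2; p--) {
        int is_prime = 1;
        for (uint64_t d = 2; d * d <= p; d++) {
            if (p % d == 0) {
                is_prime = 0;
                break;
            }
        }
        if (is_prime)
            return p;
    }
    return 2;  /* not reached for n >= 3 */
}

/* Buckets the initial probe can never land on. */
static uint32_t unreachable_buckets(uint32_t size)
{
    return size - largest_prime_below(size);
}

/* Bucket visited after `step` probes: prime modulus for the first one,
 * then increasing steps wrapped with a bitmask. */
static uint32_t probe(uint32_t hash, uint32_t size, uint32_t step)
{
    uint32_t i = hash % largest_prime_below(size);
    for (uint32_t s = 1; s <= step; s++)
        i = (i + s) & (size - 1);
    return i;
}
```

In a 256-slot table the keys 256 and 512 start at buckets 5 and 10 instead of colliding at 0, and a chain that starts at bucket 250 moves on to 251 and 253, two of the buckets the initial probe can't reach.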

So how likely is this kind of key distribution in practice? I think it's fairly likely. One example I can think of is when your keys are pointers. This is reasonable enough when you're keeping sets of objects around and you're only interested in knowing whether an object belongs to a given set, or maybe you're associating objects with some extra data. Alignment ensures limited variation in the low-order key bits. Another example is if you have some kind of sparse multidimensional array with indexes packed into integer keys.

Total resource consumption is as you'd expect. I should add that there are ways around this — Sparsehash and khash will both let you use a more complex hash function if you supply it yourself, at the cost of lower performance in the general case.

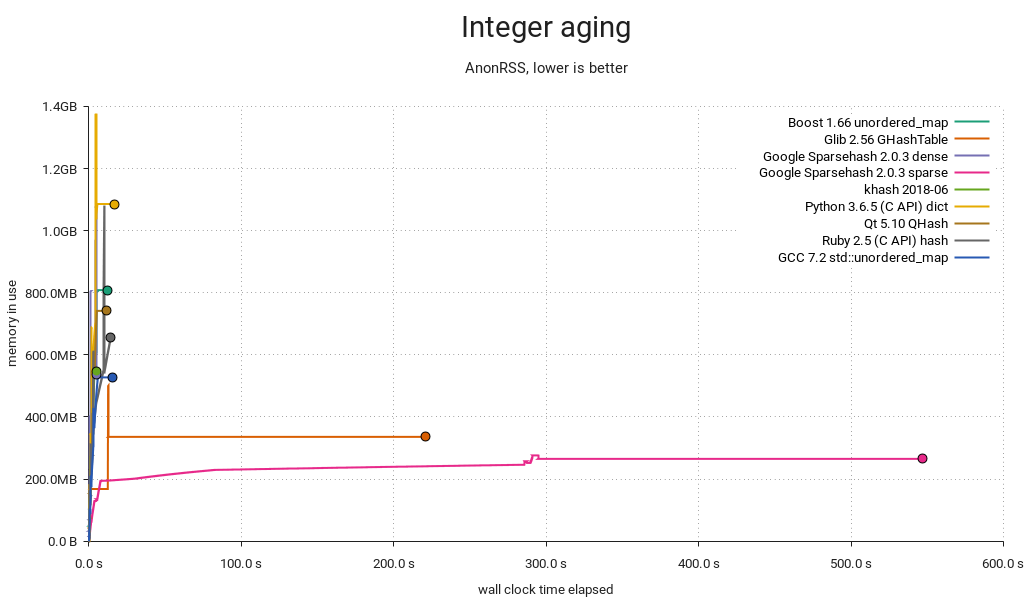

Yikes! This is the most complex test, consisting of populating the table with 20M random integers in the range 0-20M and then aging it by alternately deleting and inserting random integers for another 20M iterations. A busy database of objects indexed by integer IDs might look something like this, and if it were backed by GHashTable or Sparsehash (sparse), it'd be a painfully slow one. It's hard to tell from the plot, but khash completes in only 4s.

Remember how I said GHashTable did suspiciously well in the first test? Well, we're paying the price. When there are a lot of keys smaller than the hash table modulus, they tend to concentrate in the low-numbered buckets, and when tombstones start to build up it gets clogged and probes take forever. Fortunately this is easy to fix — just multiply the generated hashes by a small, odd number before taking the modulo. The compiler reduces the multiplication to a few shifts and adds, but cache performance suffers slightly since keys are now more spread out.
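A minimal sketch of the fix and its effect on small keys. The multiplier 11 is an arbitrary pick for illustration, and the function names are made up; the only requirement taken from the text is "small and odd".

```c
#include <stdint.h>

#define MULTIPLIER 11u  /* hypothetical choice of small odd number */

/* Plain modulus: keys below the modulus map to themselves. */
static uint32_t bucket_plain(uint32_t hash, uint32_t modulus)
{
    return hash % modulus;
}

/* Multiply first, so small keys are scattered across the whole table. */
static uint32_t bucket_spread(uint32_t hash, uint32_t modulus)
{
    return (hash * MULTIPLIER) % modulus;
}

/* Highest bucket touched by the keys 0 .. n_keys-1. */
static uint32_t highest_bucket(uint32_t (*bucket)(uint32_t, uint32_t),
                               uint32_t n_keys, uint32_t modulus)
{
    uint32_t hi = 0;
    for (uint32_t k = 0; k < n_keys; k++) {
        uint32_t b = bucket(k, modulus);
        if (b > hi)
            hi = b;
    }
    return hi;
}
```

With a modulus of 251, the keys 0 to 63 occupy exactly buckets 0 to 63 under the plain mapping, leaving three quarters of the table untouched. With the multiplier they reach well past bucket 200, and since 11 shares no factor with 251, no two of them collide.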

On a happier note…

All the tests so far have been on integer keys, so here's one with strings of the form "mystring-%d" where the decimal part is a pseudorandomly chosen integer in the range [0 .. 2³¹-1] resulting in strings that are 10-19 bytes long. khash and GHashTable, being the only tables that use plain C strings, come out on top. Ruby does okay with rb_str_new2(), Python and QHash are on par using PyDict_SetItemString() and QString respectively. The other C++ implementations use std::string, and Sparsehash is really not loving it. It almost looks like there's a bug somewhere — either it's copying/hashing the strings too often, or it's just not made for std::string. My impression based on a cursory search is that people tend to write their own hash functions/adapters for it.

GHashTable's on its home turf here. Since it stores the calculated hashes, it can avoid rehashing the keys on resize, and it can skip most full key compares when probing. Longer strings would hand it an even bigger advantage.

Conclusion

This post is really all about the details, but if I had to extract some general lessons from it, I'd go with these:

- Tradeoffs, tradeoffs everywhere.

- Worst-case behavior matters.

- GHashTable is decent, except for one situation where it's patently awful.

- There's some room for improvement in general, cf. robustness and peak memory use.

My hash-table-shootout fork is on GitHub. I was going to go into detail about potential GHashTable improvements, but I think I'll save that for another post. This one is way too long already. If you got this far, thanks for reading!

Update: There's now a follow-up post.

I'm not sure if you want contributions, but I've opened a PR for Rust's HashMap:

https://github.com/hpjansson/hash-table-shootout/pull/1

It doesn't shrink automatically, so that shows up on the delete tests, but you can manually call shrink_to_fit() in cases where this affects you. It's also not performing so well on the sequential integers, but I'm not sure why.

Just merged it. Thanks!

Strange that it would perform less well on sequential integers. As far as I know, it has a very high-end hash function, so the distribution should be good. But it uses Robin Hood probing, so maybe it has to do more work to make room for insertions? In my experience, quadratic probing performs better at the same load factor since there's less clustering.