I promised a closer look at GHashTable and ways to improve it; here's that look and another batch of benchmarks to boot.

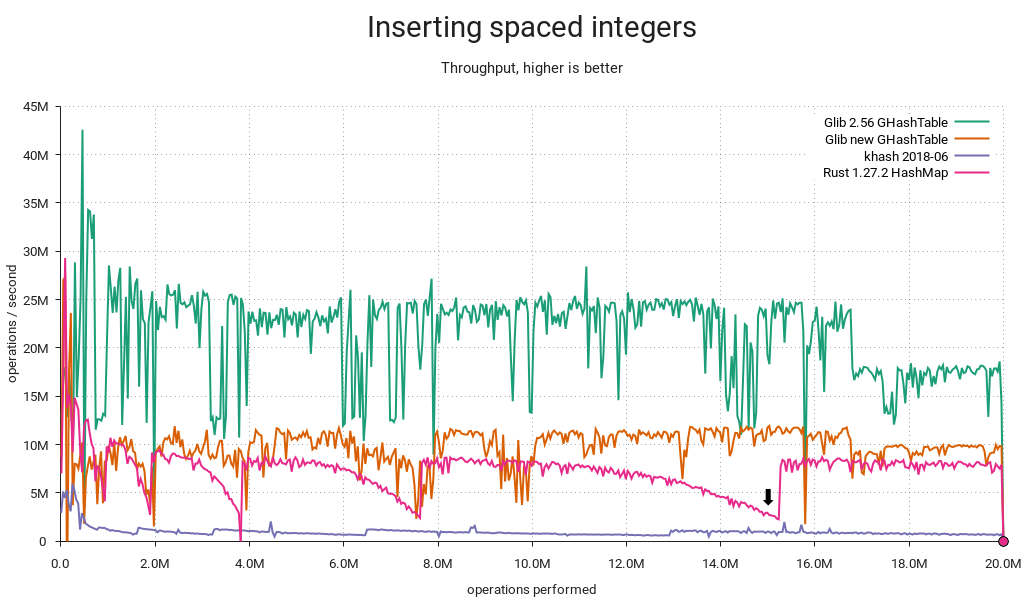

This time around I've dropped most of the other tables from the plots, keeping only khash and adding results from my GLib branch and Rust's HashMap, the latter thanks to a pull request from Josh Stone. These tables have closely comparable performance and therefore provide a good reference. Besides, every table tested previously is either generally slower or more memory-hungry (or both), and including them would compress the interesting parts of the plot.

I'll try to be brief this time¹. For more background, check out my previous post.

Bad distribution

First and foremost, the distribution was terrible with densely populated integer keyspaces. That's taken care of with a small prime multiplier post-hash.

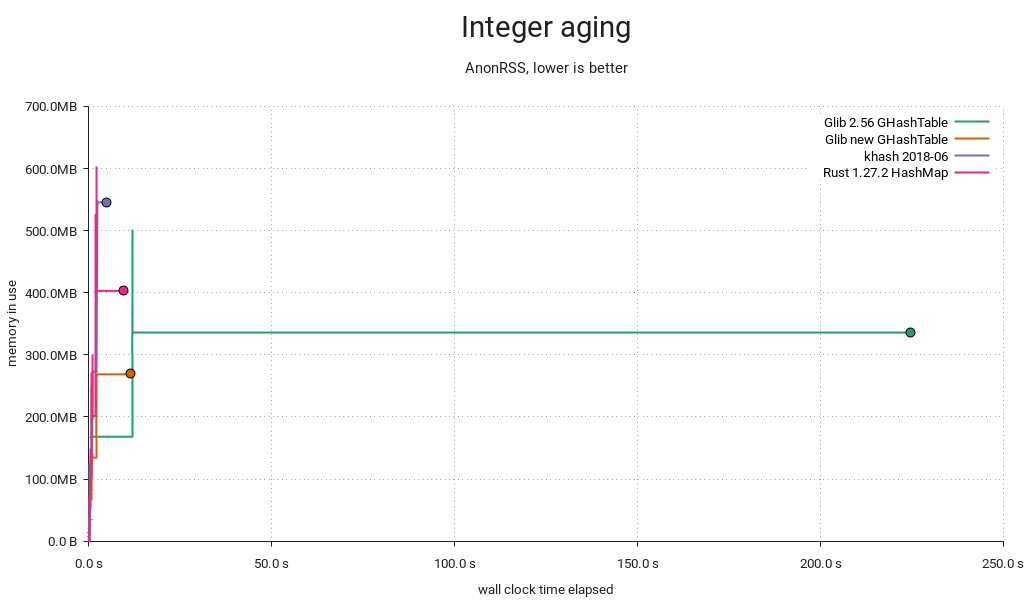

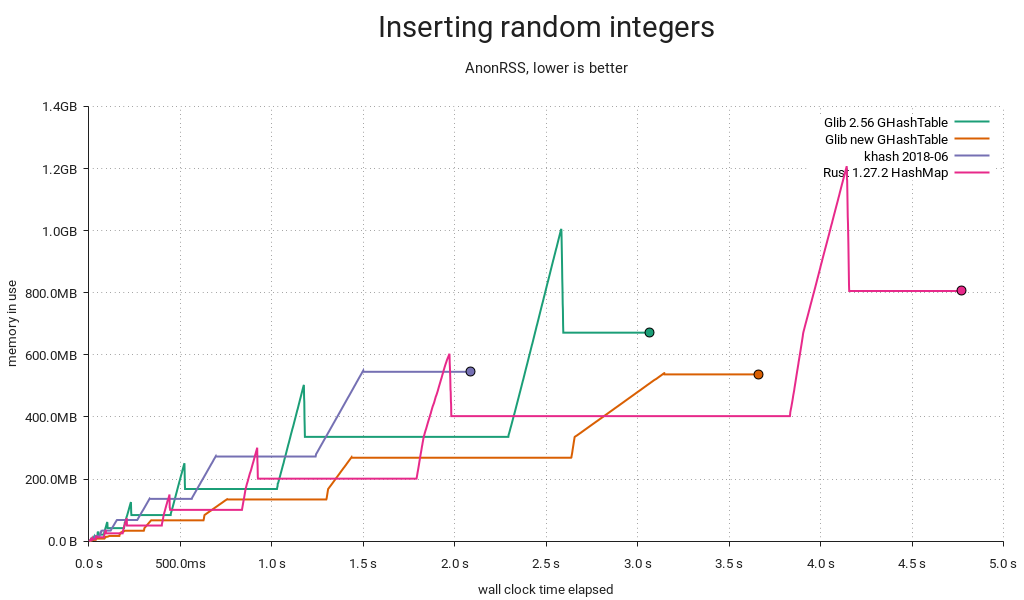

Peak memory waste

Previously, we'd resize by allocating new arrays and reinserting the entries, then freeing the old arrays. We now realloc() and rearrange the entries in place, lowering peak memory use by about a third. This can prevent going into swap or even crashing out on a memory-constrained system.

Overall memory waste

If you've got a sharp eye, you'll notice that overall memory consumption is lower now too. Whereas the old implementation always made space for 64-bit keys and values, the new one will allocate 32 bits when possible and switch to bigger entries on demand. In the above test, the keys are integers in the range [0 .. 2³²-1], reducing memory consumption by 20% overall. If values had been in [0 .. 2³²-1] too, the reduction would've amounted to 40%. A caveat though — negative integers (e.g. from GINT_TO_POINTER()) still require 64 bits due to two's complement/sign extension.

Load factor mistargeting

When GHashTable is subjected to churn/aging, it will accumulate tombstones, and eventually the sum of entries and tombstones will eclipse the maximum load, resulting in a cleanup. Since a cleanup is just a reinsertion in place, it's handled similarly to a resize, and we take the opportunity to pick a better size when this happens. Unfortunately, the grow threshold was set at .5 if the table got filled up the rest of the way by tombstones, resulting in post-grow load factors as low as .25. That's equal to the shrink threshold, so with a little (bad) luck it'd shrink back immediately afterwards.

I changed the threshold to .75, so the load factor intervals (not counting tombstones) now look like this:

- <.25 → shrink immediately → .5

- [.25 .. .75] → no change

- [.75 .. .9375] → grow on cleanup → [.375 .. .46875]

- >.9375 → grow immediately → .46875

This seems like a more reasonable stable range with less opportunity for fluctuation and waste, and there's still lots of headroom for tombstones, so cleanups aren't too frequent.

But it's slower now?

In some cases, yes — can't be helped. It's well worth it, though. And sometimes it's faster:

This particular run uses less memory than before, which is puzzling at first, since keys and values are both pointers. A look at the test's smaps reveals the cause:

01199000-08fb8000 rw-p 00000000 00:00 0 [heap]

The heap happened to be mapped in the lower 4GiB range, and GHashTable can now store 32-bit entries efficiently. That means pointers too.

I caught khash doing something interesting in this benchmark. Some time after the aging cycle has started (1), it initiates a tombstone cleanup. In this case it decides to grow the table simultaneously, starting at (2). This could be an example of the kind of load factor mistargeting I mentioned above — certainly it would have a very low load factor for the remainder of the test.

Robin Hood to the rescue?

Short answer: No. Long answer:

Rust uses Robin Hood probing, and the linear shifts required for insertion and deletion start to get expensive as the table fills up. It also came in last in a lookup-heavy load I ran. On the other hand it avoids tombstones, so there's no need for periodic cleanups, and deletions will make it progressively faster instead of slower. GHashTable's quadratic probing seems to hold a slight edge, albeit workload-dependent and, well, slight. In any case, I couldn't find a compelling reason to switch.

What about attack resistance?

The improved GHashTable is much more resistant to accidental misbehavior. However, it wouldn't be too hard to mount a deliberate attack resulting in critically poor performance². That's what makes Rust's HashMap so interesting; it gets its attack resistance from SipHash, and if these benchmarks are anything to go by, it still performs really well overall. It's only slightly slower and adds a reasonable 4 bytes of overhead per item relative to GHashTable, presumably because it's storing 64-bit SipHash hashes vs. GHashTable's 32-bit spit and glue.

I think we'd do well to adopt SipHash, but unfortunately GHashTable can't support keyed hash functions without either backwards-incompatible API changes or a hacky scheme where we detect applications using the stock g_str_hash() etc. hashers and silently replace them with calls to corresponding keyed functions. For new code we could have something like g_hash_table_new_keyed() accepting e.g. GKeyedHasher.

A better option might be to add a brand new implementation and call it say, GHashMap — but we'd be duplicating functionality, and existing applications would need source code changes to see any benefit.

¹ Hey, at least I tried.

² If you can demo this on my GLib branch, I'll send you a beer dogecoin nice postcard as thanks. Bonus points if you do it with g_str_hash().

Using SipHash for "attack resistance" is pure security theatre. I wouldn't bother changing the API for such a false concern. Falling back to a tree in the case of excessive collisions is a much better, non-theatrical solution to DoS attacks.

…and falling back to a tree should be the concern of application developers, not the hash table library.

Hi, thanks for the feedback. I agree that hash tables are often used where other data structures could do a better job, and that we tend to focus too much on them in general. Maybe it's a bit of a vicious cycle where application authors overuse hash tables, platform developers respond by pouring more resources into scraping that particular barrel, which again makes it more attractive to applications, etc.

If you have any pointers showing how SipHash is fundamentally unsound, I'd be very interested to see them. The paper [1] specifically suggests that hash tables switch to it for the added security. It's very widely used by now. I haven't revisited it since 2018, though, so I'm open to the possibility that things have changed in the meantime.

[1] http://cr.yp.to/siphash/siphash-20120918.pdf